VAD Parameter Adjustment in Speech Recognition

Subtitles generated during the speech recognition stage of video translation are sometimes very long, lasting tens of seconds or even minutes, and sometimes very short, under one second. Both problems can often be reduced by adjusting the VAD parameters.

What is VAD?

Silero VAD is an efficient Voice Activity Detection (VAD) tool that identifies whether audio contains speech and separates speech segments from silence or noise. It can be combined with speech recognition libraries such as Whisper to detect and segment speech before or after recognition, which improves recognition results.

In faster-whisper, VAD is used by default for speech analysis and segmentation. Five parameters mainly control how speech and silence are distinguished and how the audio is segmented, which in turn affects recognition quality. Each parameter is explained below, with setting suggestions:

threshold

Meaning: The speech probability threshold. Silero VAD outputs a speech probability for each audio chunk. Chunks with a probability above this value are treated as speech (SPEECH), and chunks below it are treated as silence or background noise.

Setting suggestions: The default value is 0.5, which works in most cases. For a particular data set, you can adjust it to separate speech from noise more accurately. If noise is often misjudged as speech, try increasing it to 0.6 or 0.7. If too many speech segments are missed, reduce it to 0.3 or 0.4.
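The following sketch makes the effect of threshold concrete. It turns per-frame speech probabilities into speech segments. This is a simplified illustration, not Silero VAD's actual implementation. The 32 ms frame length, the function name `frames_to_segments`, and the sample probabilities are assumptions chosen for the example.

```python
# Simplified illustration of the threshold step; not Silero VAD's real code.
FRAME_MS = 32  # assumption: each probability covers one 32 ms frame


def frames_to_segments(probs, threshold=0.5, frame_ms=FRAME_MS):
    """Group consecutive frames whose speech probability is above threshold
    into (start_ms, end_ms) segments."""
    segments = []
    start = None  # index of the first frame of the current speech run
    for i, p in enumerate(probs):
        if p > threshold and start is None:
            start = i  # speech begins
        elif p <= threshold and start is not None:
            segments.append((start * frame_ms, i * frame_ms))  # speech ends
            start = None
    if start is not None:  # audio ended while still in speech
        segments.append((start * frame_ms, len(probs) * frame_ms))
    return segments


# Made-up per-frame speech probabilities.
probs = [0.1, 0.45, 0.8, 0.9, 0.35, 0.2, 0.65, 0.7, 0.1]
print(frames_to_segments(probs, threshold=0.5))  # [(64, 128), (192, 256)]
print(frames_to_segments(probs, threshold=0.3))  # [(32, 160), (192, 256)]
```

Lowering the threshold to 0.3 makes the borderline frames (0.45 and 0.35) count as speech, so the first segment grows. Raising the threshold has the opposite effect.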

min_speech_duration_ms (Minimum speech duration, unit: milliseconds)

Meaning: Detected speech segments shorter than this value are discarded. This removes short non-speech sounds and bursts of noise.

Setting suggestions: The default value is 250 milliseconds, which suits most scenarios. If many very short fragments of noise are being output as speech, increase this value, for example to 500 milliseconds. If short but genuine utterances are being dropped, reduce it.
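In isolation, the rule is a simple filter, as in the sketch below. The function name `drop_short_segments` and the sample segments are hypothetical. In Silero VAD, this check is applied as part of its own segmentation loop.

```python
def drop_short_segments(segments, min_speech_duration_ms=250):
    """Discard (start_ms, end_ms) segments shorter than min_speech_duration_ms."""
    return [(start, end) for start, end in segments
            if end - start >= min_speech_duration_ms]


# Made-up detections: two brief blips and two longer utterances.
segments = [(0, 120), (500, 2300), (2600, 2800), (3000, 3400)]
print(drop_short_segments(segments))       # [(500, 2300), (3000, 3400)]
print(drop_short_segments(segments, 500))  # [(500, 2300)]
```

Raising the limit to 500 ms also discards the 400 ms segment. For that reason, keep the value below the length of the shortest utterance you want to keep.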

max_speech_duration_s (Maximum speech duration, unit: seconds)

Meaning: The maximum length of a single speech segment. If a segment exceeds this duration, it is split at the last silence longer than 100 milliseconds, if one exists. Otherwise, it is split forcibly just before this duration is reached. This prevents excessively long continuous segments.

Setting suggestions: The default is infinity (no limit). If you need to keep long speech segments intact, keep the default. To control segment length, for example for dialogue or segmented output, set a limit that suits your needs, such as 10 or 30 seconds. Lowering this value is the most direct way to avoid subtitles that run for tens of seconds.
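The splitting rule can be sketched as follows. This is a simplified illustration, not Silero VAD's code. The pauses inside the segment are supplied directly as hypothetical input, whereas a real VAD derives them from frame probabilities. The names `split_long_segment` and `min_pause_ms` are made up for the example.

```python
def split_long_segment(start, end, pauses, max_speech_duration_s=float("inf"),
                       min_pause_ms=100):
    """Split one (start_ms, end_ms) speech segment into pieces of at most
    max_speech_duration_s seconds.

    pauses: sorted (start_ms, end_ms) silences inside the segment
    (hypothetical input for illustration).
    """
    max_ms = max_speech_duration_s * 1000
    if max_ms <= 0:
        raise ValueError("max_speech_duration_s must be positive")
    pieces = []
    while end - start > max_ms:
        limit = start + max_ms
        # Pauses longer than min_pause_ms that begin inside the allowed window.
        usable = [(s, e) for s, e in pauses
                  if start < s < limit and e - s > min_pause_ms]
        if usable:
            pause_start, pause_end = usable[-1]  # cut at the last usable pause
            pieces.append((start, pause_start))
            start = pause_end
        else:
            pieces.append((start, limit))  # no usable pause: forced cut
            start = limit
    pieces.append((start, end))
    return pieces


pauses = [(7000, 7400), (9500, 9550), (16000, 16300)]  # made-up
print(split_long_segment(0, 24000, pauses))  # default: no limit
# [(0, 24000)]
print(split_long_segment(0, 24000, pauses, max_speech_duration_s=10))
# [(0, 7000), (7400, 16000), (16300, 24000)]
print(split_long_segment(0, 25000, [], max_speech_duration_s=10))
# [(0, 10000), (10000, 20000), (20000, 25000)]
```

The 50 ms pause at 9.5 s is ignored because it is not longer than 100 ms. When no usable pause exists at all, the segment is cut at exactly 10-second intervals.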

min_silence_duration_ms (Minimum silence duration, unit: milliseconds)

Meaning: How long to wait at the end of detected speech before closing the segment. A segment is split only where the silence lasts longer than this value.

Setting suggestions: The default value is 2000 milliseconds (2 seconds). To split speech into segments more readily, reduce this value, for example to 500 milliseconds. To split less often and produce longer segments, increase it.
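The sketch below shows the effect of min_silence_duration_ms. Speech runs separated by a pause shorter than the limit are joined into one segment. Silero VAD applies this rule while scanning frames; here, as a simplifying assumption, the runs are given as a list. The name `merge_short_pauses` and the sample data are hypothetical.

```python
def merge_short_pauses(segments, min_silence_duration_ms=2000):
    """Merge sorted (start_ms, end_ms) segments separated by a pause shorter
    than min_silence_duration_ms; longer pauses keep the segments apart."""
    merged = []
    for start, end in segments:
        if merged and start - merged[-1][1] < min_silence_duration_ms:
            merged[-1] = (merged[-1][0], end)  # pause too short: extend previous
        else:
            merged.append((start, end))  # pause long enough: new segment
    return merged


raw = [(0, 1500), (2100, 4000), (5000, 6200), (9000, 9800)]  # made-up
print(merge_short_pauses(raw))       # [(0, 6200), (9000, 9800)]
print(merge_short_pauses(raw, 500))  # [(0, 1500), (2100, 4000), (5000, 6200), (9000, 9800)]
```

With the default 2000 ms, the first three runs collapse into one 6.2-second segment. At 500 ms, every pause is long enough to split on.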

speech_pad_ms (Speech padding time, unit: milliseconds)

Meaning: Padding added before and after each detected speech segment so that it is not cut too tightly, which could clip speech at its edges.

Setting suggestions: The default value is 400 milliseconds. If the beginnings or endings of segments are being cut off, increase this value, for example to 500 or 800 milliseconds. Conversely, if segments are too long or contain too much silence, reduce it.
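The padding step can be sketched as below. It is loosely modeled on Silero VAD's post-processing. Padding is clamped to the audio boundaries, and when two neighbouring segments are closer than twice the padding, the gap between them is shared rather than overlapped. Treat this as a simplified sketch; `pad_segments` and the sample values are hypothetical.

```python
def pad_segments(segments, audio_len_ms, speech_pad_ms=400):
    """Pad sorted (start_ms, end_ms) segments by speech_pad_ms on each side,
    clamped to the audio and without letting neighbours overlap."""
    padded = [list(seg) for seg in segments]
    for i, seg in enumerate(padded):
        if i == 0:
            # Only the first start is padded here; later starts are padded
            # while handling the gap to the previous segment.
            seg[0] = max(0, seg[0] - speech_pad_ms)
        if i < len(padded) - 1:
            nxt = padded[i + 1]
            gap = nxt[0] - seg[1]
            if gap < 2 * speech_pad_ms:
                # Too little room for full padding on both sides: share the gap.
                seg[1] += gap // 2
                nxt[0] -= gap // 2
            else:
                seg[1] += speech_pad_ms
                nxt[0] -= speech_pad_ms
        else:
            seg[1] = min(audio_len_ms, seg[1] + speech_pad_ms)  # last segment
    return [tuple(seg) for seg in padded]


segments = [(300, 2000), (2500, 5000), (6500, 7800)]  # made-up, 8 s of audio
print(pad_segments(segments, audio_len_ms=8000))
# [(0, 2250), (2250, 5400), (6100, 8000)]
print(pad_segments(segments, audio_len_ms=8000, speech_pad_ms=100))
# [(200, 2100), (2400, 5100), (6400, 7900)]
```

The 500 ms gap between the first two segments is narrower than 2 × 400 ms. As a result, each side gets 250 ms of it instead of overlapping.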

The best values for these parameters depend on your speech data and application scenario. A well-chosen configuration can significantly improve VAD performance.
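Outside this tool, the same five settings can be passed to faster-whisper directly from Python through `WhisperModel.transcribe(..., vad_filter=True, vad_parameters=...)`. The sketch below makes several assumptions:

- faster-whisper must be installed for the transcription part to run.
- The helper names `build_vad_parameters` and `transcribe_with_vad`, the model size "small", and the audio file name are hypothetical.
- The defaults mirror the values quoted in this article. faster-whisper's own defaults may differ between releases, so check the version you use.

```python
def build_vad_parameters(threshold=0.5, min_speech_duration_ms=250,
                         max_speech_duration_s=float("inf"),
                         min_silence_duration_ms=2000, speech_pad_ms=400):
    """Bundle the five VAD settings into a dict, rejecting obviously bad values.

    Hypothetical helper; defaults follow the values quoted in this article.
    """
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be between 0 and 1")
    if max_speech_duration_s <= 0:
        raise ValueError("max_speech_duration_s must be positive")
    for name, value in (("min_speech_duration_ms", min_speech_duration_ms),
                        ("min_silence_duration_ms", min_silence_duration_ms),
                        ("speech_pad_ms", speech_pad_ms)):
        if value < 0:
            raise ValueError(f"{name} must not be negative")
    return {
        "threshold": threshold,
        "min_speech_duration_ms": min_speech_duration_ms,
        "max_speech_duration_s": max_speech_duration_s,
        "min_silence_duration_ms": min_silence_duration_ms,
        "speech_pad_ms": speech_pad_ms,
    }


def transcribe_with_vad(audio_path, model_size="small", **vad_settings):
    """Transcribe audio_path with faster-whisper's VAD filter enabled."""
    # Imported here so build_vad_parameters works without faster-whisper installed.
    from faster_whisper import WhisperModel

    model = WhisperModel(model_size)
    segments, _info = model.transcribe(
        audio_path,
        vad_filter=True,
        vad_parameters=build_vad_parameters(**vad_settings),
    )
    return [(seg.start, seg.end, seg.text) for seg in segments]


print(build_vad_parameters(min_silence_duration_ms=500, max_speech_duration_s=10))
# Hypothetical file name; requires faster-whisper and a downloaded model:
# transcribe_with_vad("video_audio.wav", min_silence_duration_ms=500, max_speech_duration_s=10)
```

Validating the values before passing them on keeps a typo, such as a threshold of 5 instead of 0.5, from silently producing odd segmentation.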

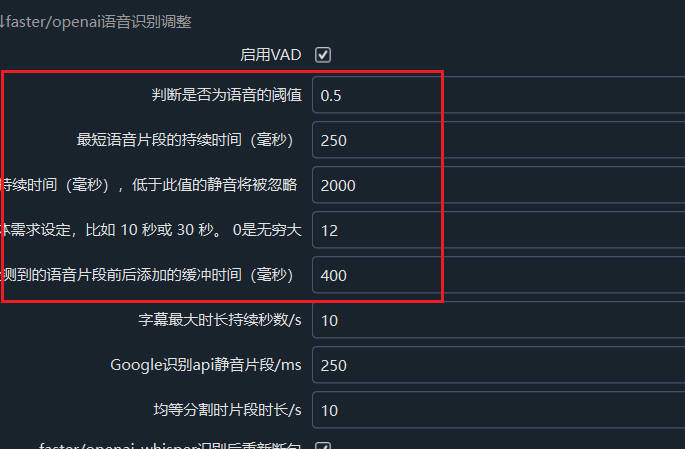

The above parameters can be modified and adjusted in Menu -- Tools/Options -- Advanced Options -- faster/openai. You can also select

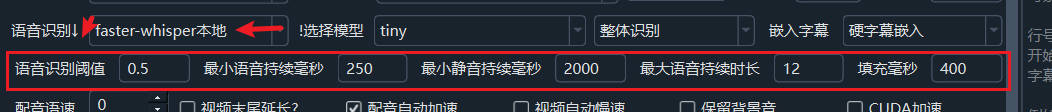

faster-whisper localafter speech recognition in the main interface, and click the "Speech Recognition" text on the left to display the modification text boxes for these parameters below.

Summary:

threshold: Adjust according to the data set; the default of 0.5 works well in most cases.

min_speech_duration_ms and min_silence_duration_ms: Determine the minimum length of speech segments and how readily silence splits them; fine-tune them for the application scenario.

max_speech_duration_s: Prevents speech segments from growing unreasonably long; usually set according to the specific application.

speech_pad_ms: Adds a buffer around each speech segment so that it is not cut too tightly. The right value depends on your audio data and segmentation needs.

The cleaner the audio and the less background noise it contains, the better the recognition results. Even carefully tuned parameters cannot make up for a noisy background.