Why Are the Recognized Subtitles Uneven and Messy, and How Can You Optimize Them?

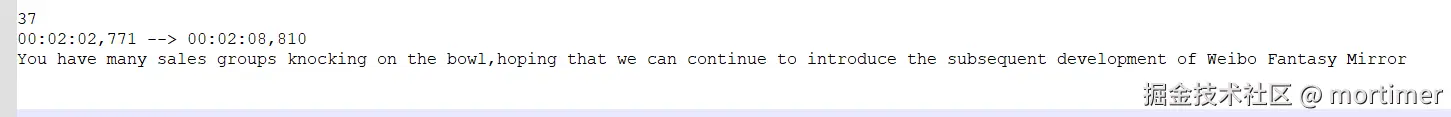

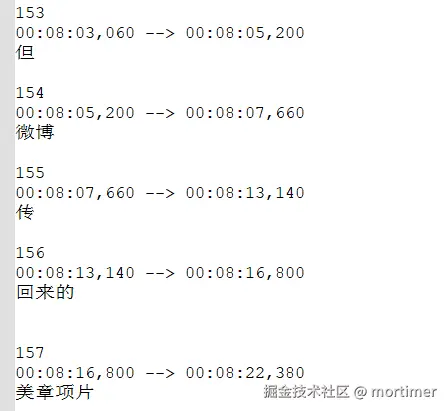

During video translation, the subtitles generated automatically in the speech recognition stage are often far from ideal. Some are so long that they nearly fill the screen; others contain only two or three characters and look fragmented. Why does this happen?

Speech Recognition's Sentence Segmentation Standards

When speech is converted into subtitle text, sentences are usually segmented at silent intervals. The silence threshold is typically set somewhere between 200 and 500 milliseconds. If it is set to 250 milliseconds, for example, the program treats any silence lasting at least 250 milliseconds as the end of a sentence and generates a subtitle spanning from the end of the previous sentence to that point.
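The rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the software's actual code: the `Word` class, the `segment_by_silence` function, and the word-level timestamps are all hypothetical, and a real recognizer detects silence in the audio signal rather than in a list of words.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """Hypothetical word with start and end times in milliseconds."""
    text: str
    start_ms: int
    end_ms: int


def segment_by_silence(words, min_silence_ms=250):
    """Group words into subtitles, cutting wherever the gap between two
    consecutive words is at least min_silence_ms."""
    groups = []
    current = []
    for word in words:
        if current and word.start_ms - current[-1].end_ms >= min_silence_ms:
            groups.append(current)
            current = []
        current.append(word)
    if current:
        groups.append(current)
    # Each subtitle is (start_ms, end_ms, text).
    return [
        (g[0].start_ms, g[-1].end_ms, " ".join(w.text for w in g))
        for g in groups
    ]


words = [
    Word("Hello", 0, 400),
    Word("everyone", 450, 900),   # 50 ms gap: stays in the same subtitle
    Word("welcome", 1200, 1600),  # 300 ms gap: starts a new subtitle
    Word("back", 1650, 1900),
]

for start, end, text in segment_by_silence(words):
    print(start, end, text)
```

With the 250 millisecond threshold, the sample yields two subtitles. Raising the threshold to 500 merges everything into one long subtitle, and lowering it to 50 produces one subtitle per word, which mirrors the two failure modes described at the top of this article.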

Factors Affecting Subtitle Quality

- Speaking Speed:

If the speaker talks fast, with almost no pauses or only pauses shorter than the silence threshold (250 milliseconds in the example above), the resulting subtitles will be very long. A single subtitle may last tens of seconds and fill the screen when embedded in the video.

- Irregular Pauses:

Conversely, if the speaker pauses unnecessarily, for example several times in the middle of a coherent sentence, the subtitles will be fragmented, with some displaying only a few words.

- Background Noise:

Background noise or music can also interfere with the detection of silent intervals, which makes both segmentation and recognition less accurate.

- Pronunciation Clarity:

Unclear pronunciation is hard even for human listeners to understand, so recognition accuracy suffers as well.

How to Address These Issues?

- Reduce Background Noise:

If the background noise is high, you can separate human voices from background sounds before recognition to remove interference and improve recognition accuracy.

- Use a Large Speech Recognition Model:

If your computer's performance allows it, use a large model for recognition, such as large-v2 or large-v3-turbo.

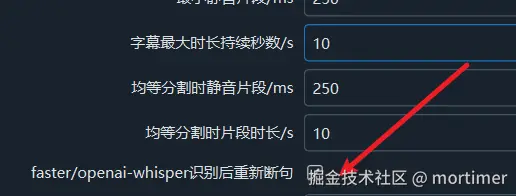

- Adjust Silent Interval Duration:

The software sets the silent interval to 200 milliseconds by default. You can adjust this value to suit the audio: if the speech is fast, reduce it to 100 milliseconds; if there are many pauses, increase it to 300 or 500 milliseconds. To change it, open the Tools/Options menu, select Advanced Options, and modify the minimum silent interval value in the faster/openai speech recognition adjustment section.

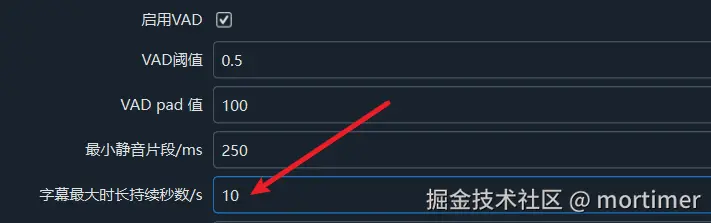
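For readers who call the faster-whisper library directly rather than through the settings dialog, the model choice and the silent interval might be expressed roughly as follows. This is a sketch under assumptions: `build_transcribe_options` and `transcribe` are hypothetical helper names, and whether the software passes its "minimum silent interval" setting to faster-whisper's `min_silence_duration_ms` VAD parameter in exactly this way is not confirmed by this article.

```python
def build_transcribe_options(min_silence_ms=200):
    """Return keyword arguments for WhisperModel.transcribe that enable
    voice activity detection and set the minimum silence duration."""
    if min_silence_ms <= 0:
        raise ValueError("min_silence_ms must be positive")
    return {
        "vad_filter": True,
        "vad_parameters": {"min_silence_duration_ms": min_silence_ms},
    }


def transcribe(audio_path, model_name="large-v2", min_silence_ms=200):
    """Transcribe a file and return (start_s, end_s, text) tuples."""
    # Imported lazily so the helper above works without the library installed.
    from faster_whisper import WhisperModel

    model = WhisperModel(model_name)
    segments, _info = model.transcribe(
        audio_path, **build_transcribe_options(min_silence_ms)
    )
    return [(seg.start, seg.end, seg.text.strip()) for seg in segments]


# Fast speech: a shorter interval, as suggested above.
print(build_transcribe_options(100))
```

Calling `transcribe("talk.wav", min_silence_ms=100)` would apply the shorter interval to a fast talker; the file name is a placeholder, and running it requires faster-whisper and the model weights to be installed.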

- Set Maximum Subtitle Duration:

You can set the maximum duration for subtitles; subtitles exceeding this duration will be forcibly split. This setting is also in the Advanced Options.

As shown, subtitles longer than 10 seconds will be re-segmented.

- Set Maximum Characters per Line of Subtitle:

You can set the maximum number of characters per line of subtitle; subtitles exceeding this character count will automatically wrap or be split.
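As a rough illustration of wrapping at a character limit, Python's standard `textwrap` module can break a subtitle into lines no longer than a given width. The `wrap_subtitle` function and the limit of 30 characters are assumptions chosen for the example, not the software's defaults or its actual implementation.

```python
import textwrap


def wrap_subtitle(text, max_chars=30):
    """Break a subtitle into lines of at most max_chars characters,
    preferring to break at spaces."""
    return textwrap.wrap(text, width=max_chars)


lines = wrap_subtitle(
    "Speech recognition often produces subtitles that are far too long"
)
for line in lines:
    print(line)
```

Note that `textwrap` breaks at whitespace, so text written without spaces (Chinese or Japanese, for example) is simply cut at exactly `max_chars` characters; a real implementation would need language-aware handling.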

- Enable Re-segmentation Feature:

When this option is enabled together with the maximum subtitle duration and maximum characters per line settings above, the program automatically re-segments sentences.

Once the silent interval, maximum subtitle duration, maximum characters per line, and re-segmentation settings above are configured, the program first generates subtitles based on silent intervals. When it encounters a subtitle that lasts too long or has too many characters, it splits that subtitle by re-segmenting. For re-segmentation, the program uses the nltk natural language processing library and weighs silent interval duration, punctuation marks, subtitle character count, and other factors together before deciding where to split.
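To make the idea of a "comprehensive judgment" more concrete, here is a minimal sketch of one way such a re-segmentation step could work. It does not use nltk and is not the program's algorithm: the `Word` class, the function names, and the scoring weights are all hypothetical, and a hand-written score stands in for the library-based analysis. An oversized subtitle is cut at the word boundary with the best combined score for punctuation, pause length, and balance, and the halves are processed again until every piece fits.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """Hypothetical word with start and end times in milliseconds."""
    text: str
    start_ms: int
    end_ms: int


def text_of(words):
    return " ".join(w.text for w in words)


def too_long(words, max_duration_ms, max_chars):
    duration = words[-1].end_ms - words[0].start_ms
    return duration > max_duration_ms or len(text_of(words)) > max_chars


def split_score(words, i):
    """Score a cut between words[i - 1] and words[i]; higher is better.
    The weights are arbitrary values chosen for illustration."""
    prev = words[i - 1]
    score = 0.0
    if prev.text.endswith((".", "!", "?")):
        score += 3.0  # sentence-final punctuation
    elif prev.text.endswith((",", ";", ":")):
        score += 1.5  # clause-level punctuation
    # Longer pauses score higher, capped at 500 ms.
    score += min(words[i].start_ms - prev.end_ms, 500) / 250
    # Mild preference for cuts near the middle.
    score -= abs(i - len(words) / 2) / len(words)
    return score


def resegment(words, max_duration_ms=10_000, max_chars=80):
    """Recursively split a subtitle until each piece is within both limits."""
    if len(words) < 2 or not too_long(words, max_duration_ms, max_chars):
        return [words]
    cut = max(range(1, len(words)), key=lambda i: split_score(words, i))
    return (resegment(words[:cut], max_duration_ms, max_chars)
            + resegment(words[cut:], max_duration_ms, max_chars))


# Build sample timings: 400 ms per word, a 50 ms gap between words,
# and a 400 ms pause after a sentence-final period.
sentence = "The model is large, so it needs a good GPU. Smaller models run on a CPU."
words, t = [], 0
for token in sentence.split():
    words.append(Word(token, t, t + 400))
    t += 400 + (400 if token.endswith(".") else 50)

for group in resegment(words, max_chars=45):
    print(text_of(group))
```

In this sample the 72-character subtitle exceeds the 45-character limit, and the cut lands after "GPU." because that boundary has both a period and the longest pause.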